In the previous post in this series, we looked at the overall process of reporting an insurance claim at a high level. As promised, this installment concentrates on the first part of the process, i.e. FNOL (First Notification of Loss) by the policy holder, and how we can apply Azure Cognitive Services and related technologies (MS Flow, Power Platform et al.) to add value to the business process.

The first notification of loss can be made either by the policy holder directly or by the appointed insurance claim representative on behalf of the policy holder.

In this instance, as we move more and more towards a self-service model supported by “digitization”, let's assume that the policy holder notifies the insurance company directly via an app provided by the insurer, using it to report the loss, provide the relevant details and upload images of the accident taken with the phone camera. This app could be built in PowerApps, with the uploaded images added to the document management system, in this case SharePoint. In parallel, the images are submitted to the Azure Cognitive Services Computer Vision API, which analyses and interprets the image(s) and returns a textual description of them. The image of the licence plate can be interpreted by the Azure OCR API, and the resulting text can then be used to look up the corresponding account record in D365 CE and automatically create a case against the account!
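For readers curious about what the Flow connector does behind the scenes when it asks Computer Vision to describe an image, here is a minimal Python sketch. It is not part of the solution itself (which stays no-code), and it makes some assumptions: the `v3.2` path in the URL, the function names and the sample response are mine for illustration, so check them against the API version you actually use. The request is only built, not sent.

```python
import json
import urllib.request


def build_describe_request(endpoint: str, key: str, image_bytes: bytes) -> urllib.request.Request:
    """Build (but do not send) a Describe Image request.

    Assumption: the v3.2 REST path; older or newer API versions use a
    different version segment in the URL.
    """
    url = endpoint.rstrip("/") + "/vision/v3.2/describe?maxCandidates=1"
    return urllib.request.Request(
        url,
        data=image_bytes,  # raw image bytes as the request body
        method="POST",
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/octet-stream",
        },
    )


def best_caption(response_json: str) -> str:
    """Return the highest-confidence caption text, or '' if none was returned."""
    captions = json.loads(response_json).get("description", {}).get("captions", [])
    if not captions:
        return ""
    return max(captions, key=lambda c: c.get("confidence", 0.0))["text"]
```

The caption returned by `best_caption` is the "textual description" that ends up alongside the case, and the same text could be passed on to reporting systems.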

Interpreting the images via a combination of cognitive services enables:

- Helping the insurance claim advisers understand the details of the incident through the textual analysis of the crash image, in addition to the narrative provided by the policy holder and those involved in the incident.

- Reuse of the description text extracted from the crash image by reporting systems or other in-house systems for further trend reporting and analysis, by making it part of the overall “big data” held within the insurance company.

- Speed in customer service: a case is created automatically based on the registration plate image analysis using the Azure OCR API, even before a detailed conversation is had with the customer concerned.
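To make the registration plate step a little more concrete, the sketch below shows one way the OCR output could be turned into a lookup key. It assumes the response has the `regions` / `lines` / `words` shape returned by the Computer Vision OCR operation. The function name and the normalisation rule (upper-case, letters and digits only) are my own illustration, not something the Flow does for you.

```python
import re


def plate_from_ocr(ocr_result: dict) -> str:
    """Join every recognised word and keep only A-Z and 0-9.

    Assumption: the image contains nothing but the plate; any other text in
    the photo (e.g. a dealer name) would end up in the result as well.
    """
    words = [
        word["text"]
        for region in ocr_result.get("regions", [])
        for line in region.get("lines", [])
        for word in line.get("words", [])
    ]
    return re.sub(r"[^A-Z0-9]", "", "".join(words).upper())
```

Normalising the text this way means "ab12 cde" and "AB12-CDE" would both match an account stored against "AB12CDE", provided the registration is stored in the same normalised form in D365 CE.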

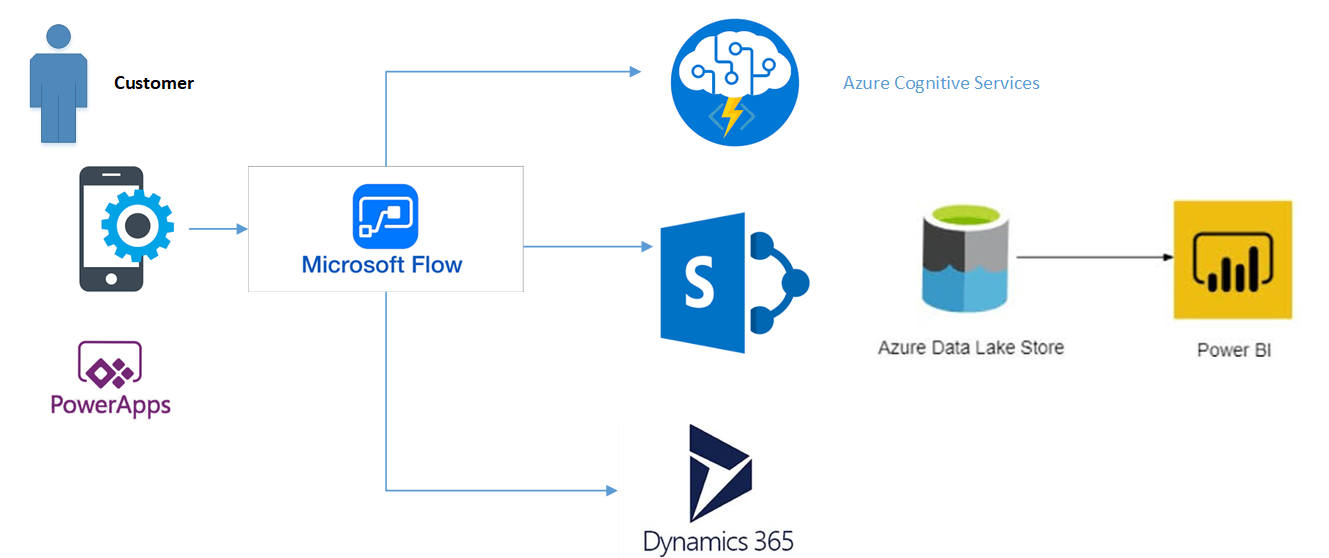

The overall architecture would be as below:

- The Microsoft PowerApps-based mobile app is used to capture the images via the smartphone camera.

- Microsoft Flow is then triggered in the background, which in turn:

  - Supplies the crash image to the Azure Computer Vision API (Describe Image Content)

  - Uploads the crash image to the company's SharePoint

  - Supplies the registration plate image to the Computer Vision API (OCR Text)

  - Uploads the registration plate image to the company's SharePoint

  - Uses the registration text returned from the OCR API to search for the account in D365 CE

  - Creates a new case against the returned account based on the additional details supplied via the app (with the supplied images held in SharePoint linked to the newly created case)

- The Flow can potentially also be used to supply the output from the Cognitive Services directly to Power BI (for further analysis or as input to any machine learning algorithms in the background).
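The steps above can also be read as a small pipeline. The sketch below is purely illustrative and is not how the Flow is built: every name in it (`FnolServices`, `handle_fnol`, the file names) is hypothetical, and the external systems are passed in as plain functions so the order of the steps is the only thing being shown. It runs the steps one after another for simplicity.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class FnolServices:
    """Hypothetical stand-ins for the connectors the Flow would call."""
    describe_image: Callable[[bytes], str]                 # Computer Vision: describe
    read_plate: Callable[[bytes], str]                     # Computer Vision: OCR
    upload_to_sharepoint: Callable[[str, bytes], str]      # returns a document URL
    find_account_by_plate: Callable[[str], Optional[str]]  # returns an account id
    create_case: Callable[[str, dict], str]                # returns a case id


def handle_fnol(services: FnolServices, crash_image: bytes,
                plate_image: bytes, details: dict) -> Optional[str]:
    """Run the FNOL steps in order and return the new case id, if any."""
    description = services.describe_image(crash_image)
    crash_url = services.upload_to_sharepoint("crash.jpg", crash_image)
    plate = services.read_plate(plate_image)
    plate_url = services.upload_to_sharepoint("plate.jpg", plate_image)

    account_id = services.find_account_by_plate(plate)
    if account_id is None:
        return None  # no matching account, so no case is created automatically

    # Merge the details typed into the app with what the services returned.
    case = dict(details, description=description, registration=plate,
                documents=[crash_url, plate_url])
    return services.create_case(account_id, case)
```

One thing the sketch makes visible is the case where no account matches the plate text. What should happen then (for example, routing to a claims adviser) is a design decision the Flow would need to handle too.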

The screen for the mobile app created using the Canvas PowerApps platform is as below (Note: I do not claim to be a UI expert or a PowerApps pro at this stage, so please excuse the odd colour scheme or “lack of finesse” in the UI design :))

The associated flow that runs in the background once the customer submits the details and associated images is as below:

The most important thing to note: all of the above, from the mobile app to the Flow-based logic running in the background that leads to the creation of the case in D365, has been carried out without writing a single line of code (if the expressions in PowerApps do not qualify as code)! I have so far kept true to the promise of keeping this as “no code” as possible, and I hope to continue the trend as we try to automate the process further down the line.